AI & ML 101 – Jan 25¶

Intro to the workshop series¶

- My expectation: explain and discuss algorithms, discuss political and societal implications, and ideally work on a software project.

- I’d like a collaborative approach; I hope some members have some ML background.

- Ideally, everybody can understand everything.

- Do we want to have a project? If so, what?

The workhorse of Machine Learning (linear regression)¶

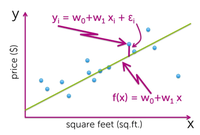

- Explain how to make predictions from data by fitting a line through data points

- Ideally, program a simple algorithm to do that (linear regression)

- Set up Python, PyCharm and a virtualenv for everybody who’s interested in programming

- The slope of a line fitted through a list of points x and y is described by:

$$B_1 = \frac{\sum_i (x_i - \bar{x})(y_i - \bar{y})}{\sum_i (x_i - \bar{x})^2}$$

- and the y value at x = 0 by:

$$B_0 = \bar{y} - B_1 \bar{x}$$

where $\bar{x}$ = mean(x) and $\bar{y}$ = mean(y).

- Idea of the cost function, and that we want to minimize it

- The “magic” of ML derives from using a great deal of data and many dimensions

- how is this aspect of ML (regression/extrapolation) relevant for us? How can we game it?

- maybe: tavsiye as an example for a simple recommender system (but probably no time for that)
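The slope and intercept formulas above, together with the cost function idea, can be sketched in a few lines of plain Python. This is only a minimal illustration: the function names `fit_line`, `predict` and `cost` and the toy data are assumptions made for this example, and the cost used here is the sum of squared errors.

```python
from statistics import mean


def fit_line(x, y):
    """Return (b0, b1) for the least-squares line y = b0 + b1 * x."""
    x_mean, y_mean = mean(x), mean(y)
    # Slope: sum of products of deviations divided by sum of squared x deviations.
    numerator = sum((xi - x_mean) * (yi - y_mean) for xi, yi in zip(x, y))
    denominator = sum((xi - x_mean) ** 2 for xi in x)
    b1 = numerator / denominator
    # Intercept: the fitted line passes through (mean(x), mean(y)).
    b0 = y_mean - b1 * x_mean
    return b0, b1


def predict(x_new, b0, b1):
    """Predict y for a new x using the fitted line."""
    return b0 + b1 * x_new


def cost(x, y, b0, b1):
    """Sum of squared differences between the data and the prediction."""
    return sum((yi - predict(xi, b0, b1)) ** 2 for xi, yi in zip(x, y))


# Made-up toy data, for illustration only.
xs = [1, 2, 3, 4, 5]
ys = [1, 3, 2, 3, 5]

b0, b1 = fit_line(xs, ys)
print("intercept:", b0, "slope:", b1)
print("cost at the fitted line:", cost(xs, ys, b0, b1))
print("cost with a slightly different slope:", cost(xs, ys, b0, b1 + 0.1))
```

Changing the slope or intercept away from the fitted values makes the cost go up, which is what "minimizing the cost function" means here.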

Learning recommendations:¶

- https://machinelearningmastery.com/implement-simple-linear-regression-scratch-python/ (used as inspiration for this session)

- www.coursera.org/specializations/machin... (especially Course 1) (free AFAIK)

- www.coursera.org/learn/machine-learning (free)

- github.com/justmarkham/DAT4/blob/master... (free)

- https://www.safaribooksonline.com/library/view/learning-path-machine/9781491958155/ (not sure if free)

Multidimensional data (Feb 22)¶

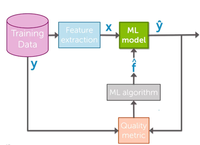

Generic description of the process used in “all” ML algorithms¶

In the one-dimensional case (a straight line that best fits the data points), f amounts to the set of parameters that fully describe a line through the data: slope and intercept.

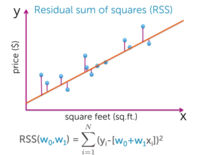

- Quality metric = “cost function” = error = sum of all individual (squared) deviations of the actual data from the prediction

Again for one-dimensional case¶

- Cumulative error:

- ML algorithm: steps to minimize that error

- WWYD?

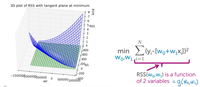

- This is what the error looks like:

- Finding the minimum means finding the point where derivatives wrt w0 and w1 are zero

- Analytically this is possible in one-dimensional case (hence the algorithm I gave you last week): setting the derivatives wrt w0 and w1 to 0 and solving for w0 and w1

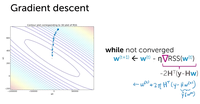

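- $\mathrm{RSS}(w_0, w_1) = \sum_i \left(y_i - (w_0 + w_1 x_i)\right)^2$

- $\nabla \mathrm{RSS} = \left(\frac{\partial \mathrm{RSS}}{\partial w_0},\ \frac{\partial \mathrm{RSS}}{\partial w_1}\right) = \left(-2 \sum_i \left(y_i - (w_0 + w_1 x_i)\right),\ -2 \sum_i \left(y_i - (w_0 + w_1 x_i)\right) x_i\right) = (0, 0)$

- → $w_0 = \frac{\sum_i y_i}{N} - w_1 \frac{\sum_i x_i}{N}$ = avg. house price - est. slope × avg. size

- → (plugging w0 into the second component of the gradient) → $w_1 = \frac{\sum_i x_i y_i - \frac{1}{N} \sum_i x_i \sum_i y_i}{\sum_i x_i^2 - \frac{1}{N} \left(\sum_i x_i\right)^2}$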
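As a quick sanity check of the derivation above, the closed-form solution can be computed from plain sums, and the gradient of the RSS evaluated at the result should then be (numerically) zero. This is a sketch only: the function names and the house size/price numbers are invented for illustration.

```python
def fit_line_sums(x, y):
    """Closed-form w0, w1 obtained by setting the RSS gradient to zero."""
    n = len(x)
    sum_x, sum_y = sum(x), sum(y)
    sum_xy = sum(xi * yi for xi, yi in zip(x, y))
    sum_xx = sum(xi * xi for xi in x)
    w1 = (sum_xy - sum_x * sum_y / n) / (sum_xx - sum_x ** 2 / n)
    w0 = sum_y / n - w1 * sum_x / n
    return w0, w1


def rss_gradient(x, y, w0, w1):
    """Partial derivatives of the RSS with respect to w0 and w1."""
    residuals = [yi - (w0 + w1 * xi) for xi, yi in zip(x, y)]
    d_w0 = -2 * sum(residuals)
    d_w1 = -2 * sum(r * xi for r, xi in zip(residuals, x))
    return d_w0, d_w1


# Hypothetical house sizes (square meters) and prices (in thousands).
sizes = [50, 70, 80, 100, 120]
prices = [150, 200, 230, 290, 330]

w0, w1 = fit_line_sums(sizes, prices)
print("w0:", w0, "w1:", w1)
print("gradient at the solution:", rss_gradient(sizes, prices, w0, w1))
```

Both gradient components come out as zero up to floating-point rounding, which confirms that the formulas for w0 and w1 describe the minimum.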

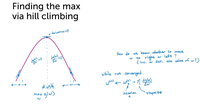

- Another possibility that avoids solving the equations analytically: find the minimum by following the slope (aka derivative) downhill until there is no further downhill.

(here: maximum instead of minimum)

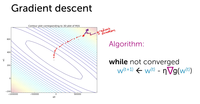

Gradient descent¶

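The gradient always points in the direction of the steepest ascent of a function at that point, analogous to the derivative in one dimension, which just gives the slope. To go downhill, we take small steps in the opposite direction.

- In higher dimensions it is (in general) not feasible to find the minimum analytically due to high complexity, but gradient descent still works, provided the cost function is differentiable and the step size is small enough. For a convex cost function such as the RSS it ends up at the global minimum; otherwise it may get stuck in a local one.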
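For the one-dimensional case, gradient descent on the RSS could look roughly like the sketch below. The function name, the starting point (0, 0), the step size and the stopping rule are assumptions chosen for this toy data set; a step size that is too large for a given data set makes the iteration diverge.

```python
def gradient_descent(x, y, step_size=0.01, tolerance=1e-9, max_iterations=100_000):
    """Minimize the RSS of the line y = w0 + w1 * x by following the gradient downhill."""
    w0, w1 = 0.0, 0.0  # arbitrary starting point
    for _ in range(max_iterations):
        residuals = [yi - (w0 + w1 * xi) for xi, yi in zip(x, y)]
        grad_w0 = -2 * sum(residuals)
        grad_w1 = -2 * sum(r * xi for r, xi in zip(residuals, x))
        # Stop once the gradient is (almost) zero: no further downhill.
        if grad_w0 ** 2 + grad_w1 ** 2 < tolerance ** 2:
            break
        # Step against the gradient, i.e. downhill.
        w0 -= step_size * grad_w0
        w1 -= step_size * grad_w1
    return w0, w1


# Made-up toy data, for illustration only.
xs = [1, 2, 3, 4, 5]
ys = [1, 3, 2, 3, 5]

w0, w1 = gradient_descent(xs, ys)
print("w0:", w0, "w1:", w1)
```

On this data the result agrees with the analytic solution (intercept 0.4, slope 0.8) to several decimal places.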

Higher dimensions? WTF?¶

- Housing prices dataset: there is more to a house than its size!

- What, for example?

- # of rooms, floor, age, …

- even bools like “has garage”, “is on lake front” etc.

- we also can add artificial features generated from the present ones by plugging them into a mathematical function (x^2, log(x), sin(x) etc.) to generate nonlinear models!
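To make the idea of features concrete, here is a small sketch that turns one house into a list of numbers, including a boolean and two artificial features. The choice of features, the function names and the weights are made up for illustration; in practice the weights would come out of the fitting procedure.

```python
import math


def make_features(size, rooms, has_garage):
    """Turn one house into a feature list; the features chosen here are only examples."""
    return [
        1.0,                         # constant feature, multiplied by the intercept weight
        size,                        # original feature
        size ** 2,                   # artificial feature, nonlinear in size
        math.log(size),              # another artificial feature
        rooms,                       # original feature
        1.0 if has_garage else 0.0,  # boolean encoded as 0 or 1
    ]


def predict(weights, features):
    """The model stays linear in the weights, even if the features are nonlinear."""
    return sum(w * f for w, f in zip(weights, features))


# Hypothetical weights, one per feature.
weights = [10.0, 2.0, 0.001, 5.0, 8.0, 15.0]
features = make_features(size=100, rooms=4, has_garage=True)
print(features)
print(predict(weights, features))
```

The prediction is still just a weighted sum, so the same fitting methods apply; only the inputs have been transformed.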

…

Gradient descent with multiple features¶
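
A minimal sketch of what gradient descent with several features could look like, assuming each data point is a list of feature values with a leading 1.0 for the intercept. The function name, step size, iteration count and toy data are all assumptions for illustration, and the step size would need tuning for real data.

```python
def gradient_descent_multi(rows, targets, step_size=0.01, iterations=5000):
    """Minimize the RSS of a linear model with one weight per feature."""
    n_features = len(rows[0])
    weights = [0.0] * n_features  # arbitrary starting point
    for _ in range(iterations):
        # Residual of each data point: actual value minus prediction.
        residuals = [
            t - sum(w * f for w, f in zip(weights, row))
            for row, t in zip(rows, targets)
        ]
        # One partial derivative of the RSS per weight.
        gradient = [
            -2 * sum(r * row[j] for r, row in zip(residuals, rows))
            for j in range(n_features)
        ]
        # Step against the gradient.
        weights = [w - step_size * g for w, g in zip(weights, gradient)]
    return weights


# Made-up data generated from target = 1 + 2 * a + 3 * b, with a leading 1.0 per row.
rows = [
    [1.0, 0.0, 0.0],
    [1.0, 1.0, 0.0],
    [1.0, 0.0, 1.0],
    [1.0, 1.0, 1.0],
    [1.0, 2.0, 1.0],
]
targets = [1.0, 3.0, 4.0, 6.0, 8.0]

weights = gradient_descent_multi(rows, targets)
print(weights)
```

The only change from the one-dimensional version is that the two hand-written derivatives become a loop over all weights.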

Neural networks (April 27)¶

- http://playground.tensorflow.org/

- Number recognition (MNIST)

- in nn_wtf or keras https://keras.io/

Further reading:¶

ML is not neutral¶

- Algorithms are neutral, the data they are fed aren’t

- Whoever owns the data has the advantage

- Hence the data hunger of modern IT companies: intense economic pressure to collect as much data as possible

ML as an oppression tool¶

- police prediction software (e.g. www.propublica.org/article/machine-bias...)

- facial recognition software as surveillance tool, FRS biased against people of color

- this (long) article about enshrining human biases in algorithms: https://medium.com/@blaisea/physiognomys-new-clothes-f2d4b59fdd6a

ML as a (possibly) unintentional oppression tool¶

- news feed curators (Facebook/US elections), search results (Google autocomplete, see e.g. www.theguardian.com/technology/2016/dec... ) and, in general, hacking of these algorithms by outsiders

- hiring software

ML as a liberation tool¶

- Monitoring media for mass atrocity prevention (www.concordia.ca/research/migs/projects...)

- creators.vice.com/en_us/article/counter...

- How to trick a neural network into thinking a panda is a vulture codewords.recurse.com/issues/five/why-d...

- ???

- Bias in Machine Learning link list: https://flipboard.com/@becomingdatasci/bias-in-machine-learning-rv7p7r9ry

- http://www.techrepublic.com/article/bias-in-machine-learning-and-how-to-stop-it/

Evolutionary/genetic algorithms¶

This is maybe too technical so early. Should have a Neural Networks intro first. Or move it into NN module.

Natural Language Processing (if we find somebody with enough expertise to hold a workshop)¶

- Bayes filtering as an example everybody knows

Recommender systems?¶

- tavsiye as an example for a simple recommender system

Unsupervised learning/clustering¶

Dumping ground (stuff that has no home)¶

Also (possibly) on the menu¶

- brief introduction to scikit-learn and pandas

- virtualenvs? https://virtualenvwrapper.readthedocs.io/en/latest/

- overfitting & underfitting

- regularization

- finding interesting parameters (lasso regularization)

- Training/validation/test data

Possible topics:¶

- Current status quo

- Where does all this lead?

- Social implications of technical development

- Commodification of ML (“everybody” can do ML stuff)

- What are you planning? Projects, ideas?

- Practical examples, other languages, ML as a service

Link list¶

Drop your links here!

Deep Learning Frameworks¶

- https://keras.io/ (Neural Networks abstraction layer)

- mxnet.io/get_started/index.html (another Deep Learning framework)

Tricking ML¶

- www.fastcodesign.com/3068556/reminder-y... The Art Of Manipulating Algorithms

- codewords.recurse.com/issues/five/why-d... How to trick a neural network into thinking a panda is a vulture

- www.heise.de/newsticker/meldung/Angriff...

- https://www.flickr.com/photos/stml/33411791166/in/album-72157679746550690/ Traps for autonomous cars (art project, no idea if it actually works)

- creators.vice.com/en_us/article/counter...

Data sets¶

- http://thinknook.com/twitter-sentiment-analysis-training-corpus-dataset-2012-09-22/ Twitter Sentiment Analysis Training Corpus

Misc¶

- http://www.pyimagesearch.com/ (deep learning and image recognition blog)

- kevinhughes.ca/blog/tensor-kart TensorKart: self-driving MarioKart with TensorFlow
